How To Fine Tune Stability AI's Text-to-Image Model with A StreamLit App

Refine StabilityAI's text-to-image model using the Streamlit app in the cloud. Choose various engines, fine-tune parameters, and customize image size!

StabilityAI is an open source generative AI company with a focus on developing models for a diverse range of domains such as language, code, video, 3D content, and more. In this blog, we will take a closer look at the text-to-image model based on Stable Diffusion.

Discover how you can enhance the image generation process by fine-tuning the parameters using a cloud-based Streamlit application that I have built, conveniently accessible through Streamlit's community cloud platform that anyone can access. This user-friendly tool enables you to explore and adjust all the potential parameters of the text-to-image API, opening up a world of creative possibilities.

Text-to-Image Streamlit Application

Stability AI text-to-image is an image synthesis model that generates images for text prompts. Stability AI has released multiple Stable Diffusion models, with the latest one being Stable Diffusion XL 1.0.

The application requires at least two inputs:

- Stability API Key: The API which can be obtained from this link, Stability AI API Key

- Prompt: The text based on which the image will be generated.

The Streamlit cloud application can be launched by clicking on this link: https://stability-ai-text-to-image.streamlit.app/. Modify and experiment with different parameter values. Once the values are specified, click on the "submit" button and wait for the image to be displayed. To save the image, right click on the image and choose the "save image" option.

The snippet below demonstrates the Streamlit application.

Streamlit Cloud Based Stability AI's Text-to-Image Application

Fine Tuning Parameters

The following parameters can be fine tuned:

- Engine ID: Choose from the list of available engines including the latest SDXL v1.0 Release.

- Style Preset: Apply different style presets add style and consistency to the images

- Clip Guidance Preset: This paramter controls the extent to which the image generation process follows the text prompt. Higher values result in images closer to the prompt. For best results, use CLIP guidance with ancestral sampler.

- Sampler: Defines how to update a model based on an output of a pretrained model. If a specific sampler is not used, an appropriate sampler for the inference engine will be applied automatically.

Generated Images.

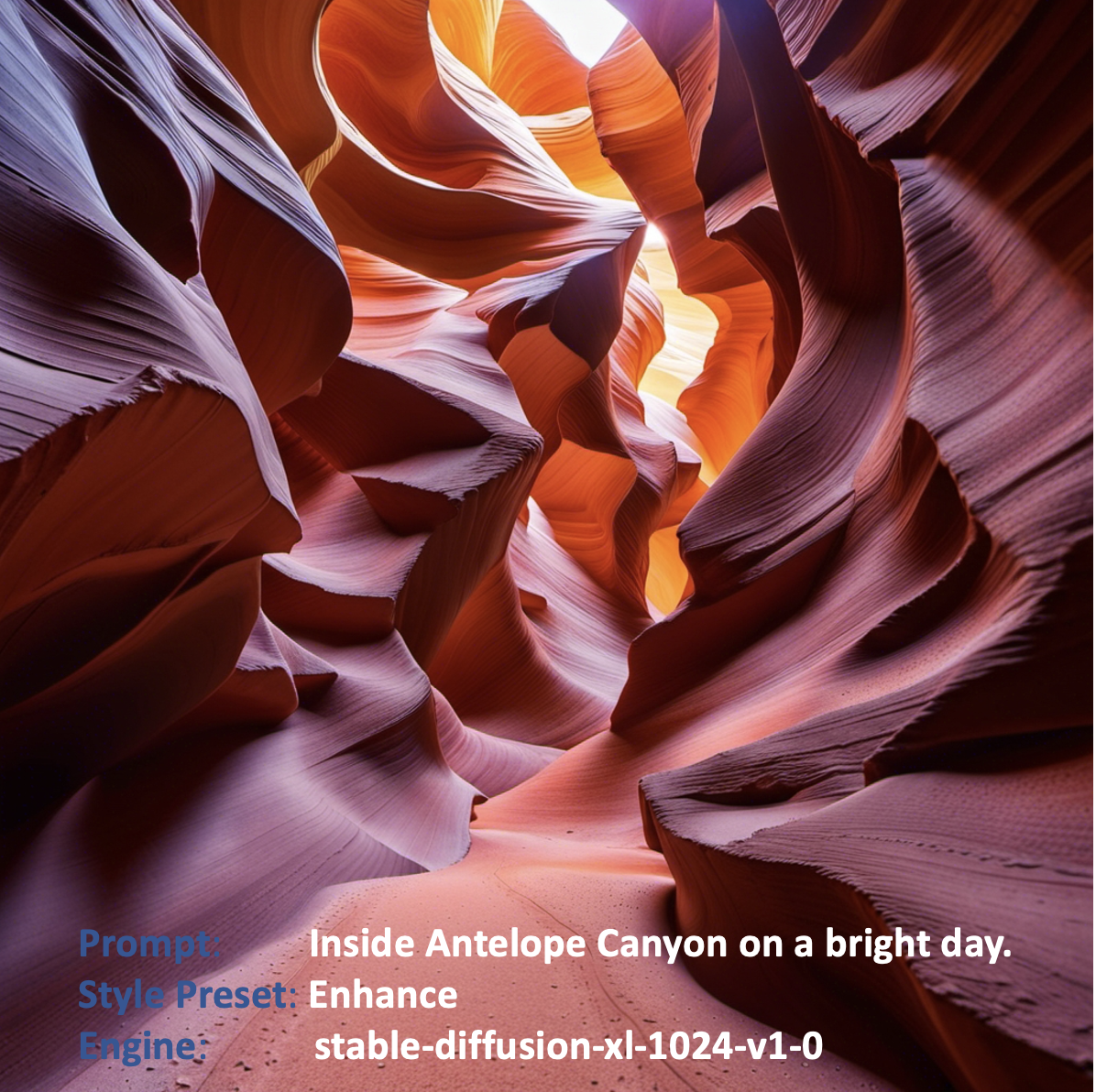

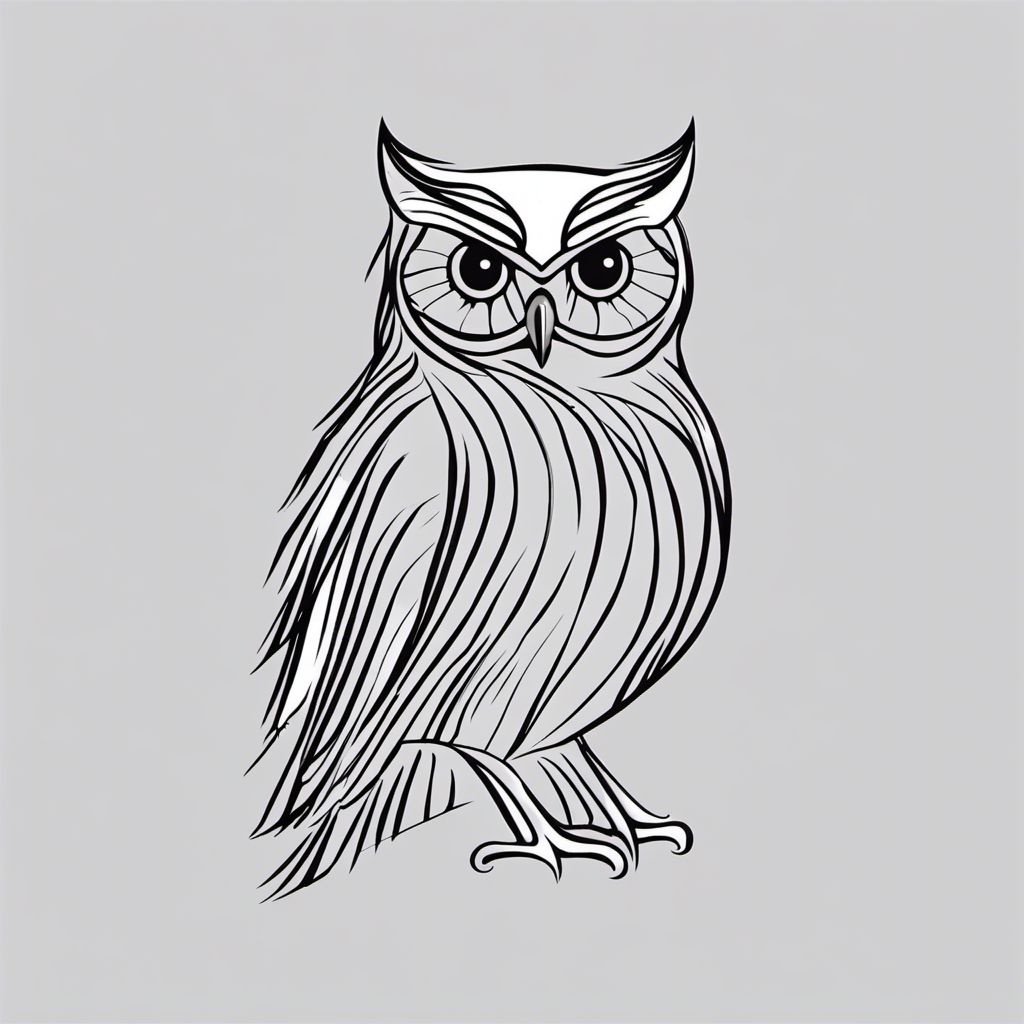

The images below have been generated to showcase different style presets. All the images below use the latest Stability AI engine Stable Diffusion-xl-1024-v1-0. Feel free to experiment with different prompts, different engines, and tune other parameters to generate different images.

Engine: Stable Diffusion-xl-1024-v1-0, Style Preset: Photographic

Engine: Stable Diffusion-xl-1024-v1-0, Style Preset: Origami

Engine: Stable Diffusion-xl-1024-v1-0, Style Preset: Enhance

Engine: Stable Diffusion-xl-1024-v1-0, Style Preset: 3d-Model

Engine: Stable Diffusion-xl-1024-v1-0, Style Preset: Anime

Engine: Stable Diffusion-xl-1024-v1-0, Style Preset: Line Art

The text-to-image Stable-Diffusion model offers a great solution for creating images from text descriptions. While various other models exist for text-to-image conversion, if you've chosen Stability AI's text-to-image model, you now have access to a cloud-based tool that comes with the added benefit of being entirely free to use. This application caters to a wide range of users, making it suitable and accessible for both casual creators and advanced users seeking sophisticated image generation capabilities. Whether you are a novice or a seasoned professional, this tool promises to meet your creative needs.